The Rise of Neuromorphic Computing: Revolutionizing AI with Brain-Inspired Chips in 2025

Introduction

As artificial intelligence (AI) workloads grow more complex, traditional computing architectures are running into limits in energy efficiency and scalability. Neuromorphic computing, a technology inspired by the structure and function of the human brain, is emerging in 2025 as a promising way to address these challenges.

This article covers the latest advances in neuromorphic hardware, their potential to reshape AI, and how developers and enterprises can prepare for this disruptive technology.

What is Neuromorphic Computing?

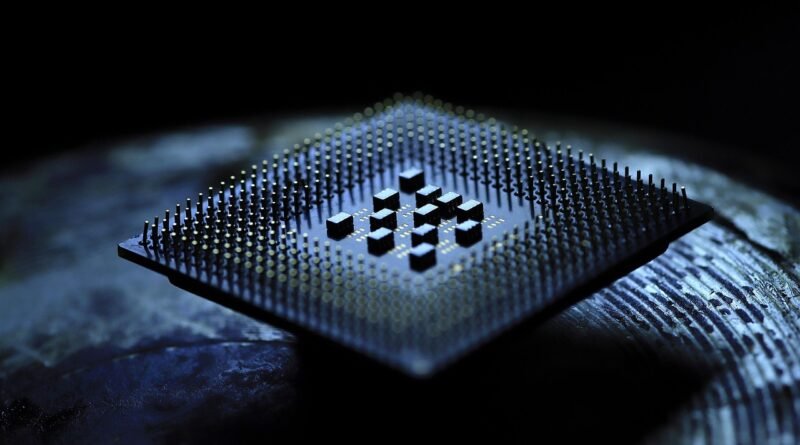

Neuromorphic computing mimics the brain’s neural networks with specialized chips that process information through interconnected artificial neurons and synapses, typically communicating via discrete spikes. Unlike conventional processors, which are clock-driven and move data back and forth between separate memory and compute units, neuromorphic chips are massively parallel and event-driven: a neuron does work only when it receives or emits a spike. This design offers very low power consumption on sparse workloads and supports real-time, on-chip learning.
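
To make the idea of an artificial spiking neuron concrete, here is a minimal sketch of a leaky integrate-and-fire (LIF) neuron, a textbook model often used to introduce neuromorphic designs. It is a plain software simulation, not the circuit of any particular chip, and the function name `simulate_lif` and all parameter values are assumptions chosen for illustration.

```python
def simulate_lif(input_current, dt=1.0, tau=20.0,
                 v_rest=0.0, v_thresh=1.0, v_reset=0.0):
    """Simulate one leaky integrate-and-fire neuron.

    input_current: list of input values, one per time step (hypothetical units).
    Returns (voltages, spike_times), where spike_times are step indices.
    """
    v = v_rest
    voltages = []
    spike_times = []
    for step, current in enumerate(input_current):
        # Forward-Euler update: leak toward v_rest while integrating the input.
        v += (dt / tau) * (-(v - v_rest) + current)
        if v >= v_thresh:
            # Threshold crossed: emit a spike (an "event") and reset.
            spike_times.append(step)
            v = v_reset
        voltages.append(v)
    return voltages, spike_times


if __name__ == "__main__":
    # 50 silent steps followed by 200 steps of constant drive.
    _, spikes = simulate_lif([0.0] * 50 + [1.5] * 200)
    print("spike steps:", spikes)
```

The neuron produces no output during the silent period and fires at regular intervals once driven, which is the behavior that lets event-driven hardware stay idle when nothing is happening.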

Key Developments in 2025

- Breakthrough Chips: Neuromorphic processors such as Intel’s Loihi 2 and IBM’s TrueNorth (the latter dating back to 2014) integrate roughly a million artificial neurons and more than a hundred million synapses per chip, enabling complex sensory processing on edge devices.

- AI Model Compatibility: Software frameworks increasingly let developers convert or retrain conventional deep learning models as spiking neural networks that run on neuromorphic hardware, narrowing the gap between neuroscience-inspired computing and mainstream AI.

- Energy Efficiency Gains: Neuromorphic systems have demonstrated energy reductions of up to 1000x compared with traditional GPUs on specific AI tasks, typically sparse, event-driven workloads. Savings of this kind are critical for battery-powered IoT and mobile devices.

- Real-Time Adaptability: Neuromorphic chips excel in applications requiring online learning and adaptation, such as autonomous robotics and adaptive control systems.
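
Online learning on spiking hardware usually relies on local rules that adjust a synapse using only the activity of the two neurons it connects. One widely taught example is spike-timing-dependent plasticity (STDP). The sketch below shows a simple pair-based form of it; the article does not say which rule any given chip uses, so the function name `stdp_update` and the constants are illustrative assumptions rather than a vendor API.

```python
import math


def stdp_update(weight, pre_spikes, post_spikes,
                a_plus=0.01, a_minus=0.012, tau=20.0,
                w_min=0.0, w_max=1.0):
    """Return the synaptic weight after pair-based STDP.

    pre_spikes / post_spikes: spike times of the input and output neuron.
    All constants are hypothetical values chosen for illustration.
    """
    for t_pre in pre_spikes:
        for t_post in post_spikes:
            delta_t = t_post - t_pre
            if delta_t > 0:
                # Input fired before output: strengthen the synapse.
                weight += a_plus * math.exp(-delta_t / tau)
            elif delta_t < 0:
                # Input fired after output: weaken the synapse.
                weight -= a_minus * math.exp(delta_t / tau)
    # Keep the weight inside its allowed range.
    return min(w_max, max(w_min, weight))


if __name__ == "__main__":
    print(stdp_update(0.5, pre_spikes=[10], post_spikes=[15]))  # potentiation
    print(stdp_update(0.5, pre_spikes=[15], post_spikes=[10]))  # depression
```

Because each update depends only on locally available spike times, a rule like this can run continuously as data arrives, with no separate training phase.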

Applications Transforming Industries

- Autonomous Vehicles: Neuromorphic processors enable vehicles to process sensor data with ultra-low latency and power, enhancing safety and decision-making.

- Healthcare: Portable neuromorphic devices support advanced brain-machine interfaces and wearable diagnostics, providing real-time monitoring and personalized therapy.

- Smart Sensors: Edge AI devices powered by neuromorphic chips perform complex pattern recognition with minimal energy, improving environmental monitoring and industrial automation.

- Robotics: Neuromorphic computing offers robots better sensory integration and adaptive behavior, making them more efficient in dynamic, unstructured environments.

Challenges to Overcome

- Programming Complexity: Developing software for neuromorphic systems requires new paradigms and tools that differ significantly from traditional programming.

- Standardization: Lack of common standards for hardware and software interoperability slows widespread adoption.

- Integration: Combining neuromorphic chips with existing digital infrastructure presents engineering and architectural challenges.

- Scalability: Scaling neuromorphic architectures to match the size and complexity of biological brains remains a long-term goal.

What Developers Should Know

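- Emerging SDKs: Frameworks like Intel’s open-source Lava, the successor to NxSDK (which targeted the first-generation Loihi), are making neuromorphic programming more accessible. IBM’s Compass, by contrast, is a simulator for the TrueNorth architecture rather than a general-purpose SDK.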

- Hybrid Approaches: Expect hybrid systems combining neuromorphic and classical processors to deliver practical performance benefits in the near term.

- Focus on Edge AI: Neuromorphic chips are especially valuable for low-power, real-time AI at the edge, so projects targeting IoT or robotics stand to benefit most.

- Learning Curve: Invest time in understanding neural coding principles and spiking neural networks to leverage neuromorphic hardware effectively.
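
As a first step into neural coding, it helps to see how an ordinary number can be turned into spikes and back. The sketch below uses rate coding, one of several common schemes, in which a larger value produces more spikes per unit time. The helper names `rate_encode` and `rate_decode` and their parameters are hypothetical and not part of any neuromorphic SDK.

```python
import random


def rate_encode(value, n_steps=1000, max_rate=0.5, seed=0):
    """Encode a value in [0, 1] as a binary spike train.

    At each step the neuron spikes with probability value * max_rate.
    A fixed seed keeps the example reproducible.
    """
    if not 0.0 <= value <= 1.0:
        raise ValueError("value must be in [0, 1]")
    rng = random.Random(seed)
    p_spike = value * max_rate
    return [1 if rng.random() < p_spike else 0 for _ in range(n_steps)]


def rate_decode(spikes, max_rate=0.5):
    """Estimate the encoded value from the observed firing rate."""
    return sum(spikes) / (len(spikes) * max_rate)


if __name__ == "__main__":
    train = rate_encode(0.6, n_steps=5000)
    print("spike count:", sum(train))
    print("decoded value:", round(rate_decode(train), 3))
```

The decoded value is only an estimate, and it gets better as more time steps are observed. That trade-off between latency and precision is one reason other schemes, such as timing-based codes, are also worth studying.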

Future Outlook

Neuromorphic computing is poised to become a cornerstone of next-generation AI systems by offering brain-like efficiency and adaptability. As research and commercial interest grow, we anticipate:

- Broader adoption in consumer electronics and IoT devices.

- Increased collaboration between neuroscientists, engineers, and AI researchers.

- New AI algorithms designed specifically for event-driven, spiking neural networks.

- Potential breakthroughs in artificial general intelligence (AGI) leveraging neuromorphic principles.

Conclusion

In 2025, neuromorphic computing is an exciting frontier that promises to ease current AI limitations and enable smarter, more efficient machines. Innovators and developers who stay informed and experiment with neuromorphic platforms will be well placed to build the applications it makes possible.